Artificial intelligence (AI) has been making headlines for some time now. From ChatGPT to Elon Musk’s Grok, AI is at the forefront of public interest. On February 21, 2024, Google added to that list with the launch of Gemma. Let’s dive in and look at what this new family of models offers.

What is Gemma?

Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology used to create the Gemini models. Developed by Google DeepMind and other teams across Google, Gemma is inspired by Gemini, and its name comes from the Latin word gemma, meaning “precious stone.” Alongside the model weights, Google is releasing tools to help developers innovate, collaborate, and use Gemma models responsibly.

Let’s have a brief look at its features:

- Compact Design: It comes in two sizes, one with 2 billion parameters and another with 7 billion. These are much smaller than many comparable models, so they run faster and need less computing power, which makes them practical for ordinary computers and potentially even phones.

- Open to Everyone: Unlike many other advanced models, its model weights are freely available for anyone to use. This allows developers and researchers to experiment with it, modify it, and help improve it.

- Tailored for Specific Tasks: This AI model isn’t one-size-fits-all. Alongside the base models, it comes in instruction-tuned versions suited to particular jobs, such as answering questions or summarizing text, which makes it more effective at those tasks and more flexible in real-world use.
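To put “doesn’t need as much computing power” into rough numbers, here is a back-of-the-envelope sketch of how much memory the weights alone occupy at common numeric precisions. It assumes the nominal 2-billion and 7-billion parameter counts quoted above; the released checkpoints differ somewhat, and actually running a model needs extra memory for activations and caching that this estimate ignores.

```python
# Back-of-the-envelope, weights-only memory estimate for the two Gemma sizes.
# Assumption: the nominal 2B and 7B parameter counts quoted in the text; the
# released checkpoints differ somewhat, and inference also needs memory for
# activations, the KV cache, and framework overhead, none of which is counted here.

BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,   # 8-bit quantization
    "int4": 0.5,   # 4-bit quantization
}

MODEL_SIZES = {"Gemma 2B": 2e9, "Gemma 7B": 7e9}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Memory needed just to store the weights, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


for name, params in MODEL_SIZES.items():
    estimates = ", ".join(
        f"{dtype}: {weight_memory_gib(params, dtype):.1f} GiB"
        for dtype in BYTES_PER_PARAM
    )
    print(f"{name} -> {estimates}")
```

Under these assumptions, the 7B model needs about 13 GiB just for its bfloat16 weights versus under 4 GiB for the 2B model, and 4-bit quantization brings the 2B model below 1 GiB. That gap is why the smaller variant is the more plausible fit for laptops and phones.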

How Does Gemma Work?

Google has indicated that Gemma shares key technical and infrastructure components with Gemini, its most capable AI model to date. Google reports that, building on this foundation, both the 2B and 7B models achieve best-in-class performance for their sizes compared with other open models.

According to Google, Gemma even surpasses significantly larger models on key benchmarks while meeting rigorous standards for safe and responsible outputs.

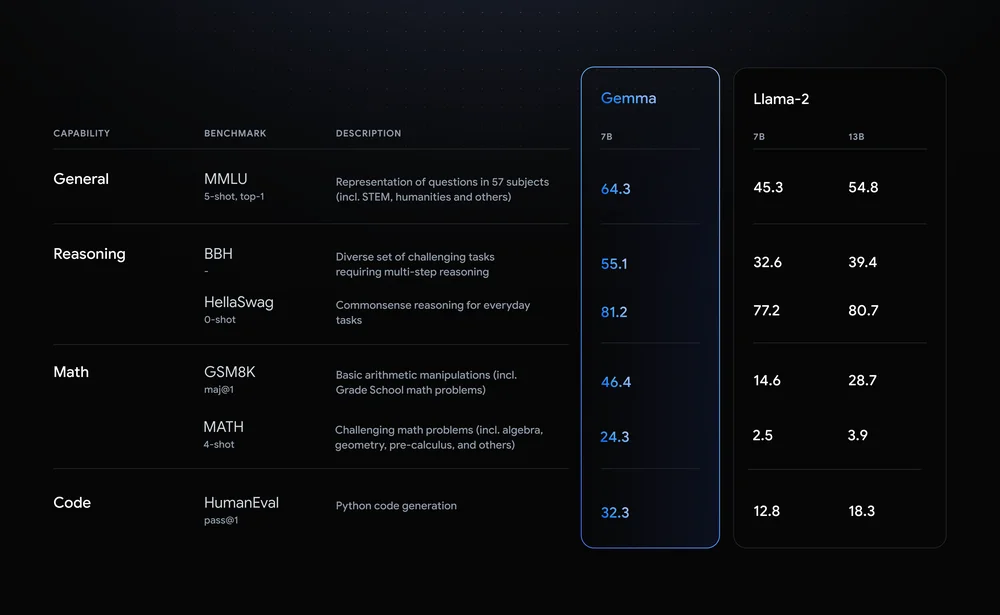

According to data provided by Google, Gemma 7B outperformed Meta’s Llama 2 7B across domains such as reasoning, mathematics, and code generation. For instance, Gemma scored 55.1 on the BBH reasoning benchmark, compared with Llama 2’s 32.6. In mathematics, it scored 46.4 on GSM8K versus Llama 2’s 14.6. It also did better on harder mathematical problem-solving, scoring 24.3 on the MATH 4-shot benchmark compared with Llama 2’s 2.5. Finally, Gemma surpassed Llama 2 in Python code generation, scoring 32.3 versus 12.8.
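Raw scores are easier to compare once the gaps are spelled out. The short sketch below simply restates the figures reported in the comparison of Gemma 7B and Llama 2 7B and computes both the absolute difference in points and the ratio between the two scores; it does not rerun any benchmark, and the row labels are our own shorthand.

```python
# Scores as reported by Google in the comparison above (higher is better).
# This only restates the published numbers; no benchmark is rerun here.
REPORTED_SCORES = {
    # benchmark: (Gemma 7B, Llama 2 7B)
    "BBH (reasoning)": (55.1, 32.6),
    "GSM8K (math)": (46.4, 14.6),
    "MATH 4-shot": (24.3, 2.5),
    "Python code generation": (32.3, 12.8),
}


def compare(gemma: float, llama: float) -> tuple[float, float]:
    """Return (absolute gap in points, ratio of Gemma's score to Llama 2's)."""
    return gemma - llama, gemma / llama


for benchmark, (gemma, llama) in REPORTED_SCORES.items():
    gap, ratio = compare(gemma, llama)
    print(f"{benchmark:<24} Gemma {gemma:5.1f} | Llama 2 {llama:5.1f} | "
          f"+{gap:.1f} pts | {ratio:.1f}x")
```

The largest absolute gap is on GSM8K (31.8 points), while the largest ratio is on MATH (about 9.7x). That ratio is inflated by Llama 2’s very low baseline, so the point differences are usually the fairer way to read these results.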

How is Gemma Optimized across Frameworks?

Gemma can be fine-tuned on your own data to suit specific application requirements, such as retrieval-augmented generation (RAG) or summarization. It supports a diverse range of tools and systems, including:

- Multi-framework Tools: It supports Keras 3.0, JAX, Hugging Face Transformers, and native PyTorch, with reference implementations for both inference and fine-tuning.

- Cross-device Compatibility: These models are designed to run across a wide range of devices, including laptops, desktops, IoT devices, mobile phones, and cloud platforms, making AI capabilities broadly accessible.

- Cutting-edge Hardware Platforms: Google has worked with NVIDIA to optimize Gemma for NVIDIA GPUs, from data centers and the cloud to local RTX AI PCs, with the aim of delivering strong performance on current hardware.

- Optimized for Google Cloud: Through Google Cloud’s Vertex AI, Gemma benefits from a comprehensive MLOps toolset with a wide range of tuning options and streamlined deployment with built-in inference optimizations. Users can choose between self-managed GKE and fully managed Vertex AI, running on cost-efficient GPU, TPU, or CPU infrastructure available on either platform.
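The RAG use case mentioned above follows a retrieve-then-generate pattern. The sketch below shows only the prompt-assembly half in plain Python: a toy word-overlap retriever (a stand-in for the embedding search real systems typically use) picks the most relevant snippet, which is then wrapped in the turn markers used by Gemma’s instruction-tuned variants. The documents and function names are hypothetical, the template should be checked against the model card, and the actual model call is left out.

```python
import re

# Hypothetical mini knowledge base; in a real RAG setup these would be chunks of
# your own documents, usually ranked with embeddings rather than word overlap.
DOCUMENTS = [
    "Gemma comes in 2B and 7B parameter sizes.",
    "Gemma was released by Google on February 21, 2024.",
    "Vertex AI offers managed tuning and deployment on Google Cloud.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query (toy scoring)."""
    query_words = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_gemma_prompt(question: str, context: list[str]) -> str:
    """Wrap retrieved context and the question in Gemma-style chat turn markers.

    Assumption: the instruction-tuned checkpoints expect <start_of_turn>/<end_of_turn>
    markers; confirm the exact template on the model card before relying on it.
    """
    context_block = "\n".join(f"- {c}" for c in context)
    user_message = (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}"
    )
    return (
        f"<start_of_turn>user\n{user_message}<end_of_turn>\n"
        "<start_of_turn>model\n"
    )


question = "What parameter sizes does Gemma come in?"
prompt = build_gemma_prompt(question, retrieve(question, DOCUMENTS))
print(prompt)
# The prompt would then be passed to a Gemma model (for example through Hugging Face
# Transformers or Keras); that generation step is intentionally omitted here.
```

Keeping retrieval and prompt formatting separate from generation means the same prompt can be sent to whichever framework or deployment target from the list above you end up using.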

Is Google’s Gemma Free?

Yes, Gemma is free to use. The models are available at no cost on platforms such as Kaggle and Google Colab, and new Google Cloud users receive credits to try them. Researchers can also apply for Google Cloud credits of up to $500,000 to accelerate their projects. The model is intentionally designed to be accessible, encouraging widespread adoption and innovation within the community.

Decoding the Future of Gemma

This AI model’s open-source nature and remarkable performance have generated considerable excitement within the LLM community.

What does the future hold for this emerging family of models?

- Advancements in LLMs: Its open-source nature promotes collaboration and innovation. Researchers and developers worldwide can contribute to its improvement, speeding progress in critical areas such as interpretability, fairness, and efficiency. Gemma could also serve as a starting point for exploring models that handle more than just text, ones that might understand and generate images, audio, and video as well.

- Optimistic Outlook: With its inclusive approach and impressive capabilities, this model is a significant step toward making AI accessible to everyone. As development continues, expect even more groundbreaking applications and advancements. The model’s open-source nature fosters a dynamic community, which should keep it evolving and extend its impact on the future of LLMs.

The Bottom Line

The arrival of Gemma strengthens Google’s AI lineup, but it is also a big win for the open-source community. Researchers now have more options, such as Gemma, Llama 2, and Mistral 7B, to experiment with and build on. Because these models are open, people can work together to improve them, speeding up progress in natural language processing and shaping the future of AI.

As these open-source tools improve, we’ll likely see even more powerful language models, narrowing the gap between open models and proprietary ones.

However, whether these advancements end up being used for good or bad is still up to the people who use them, at least for now.