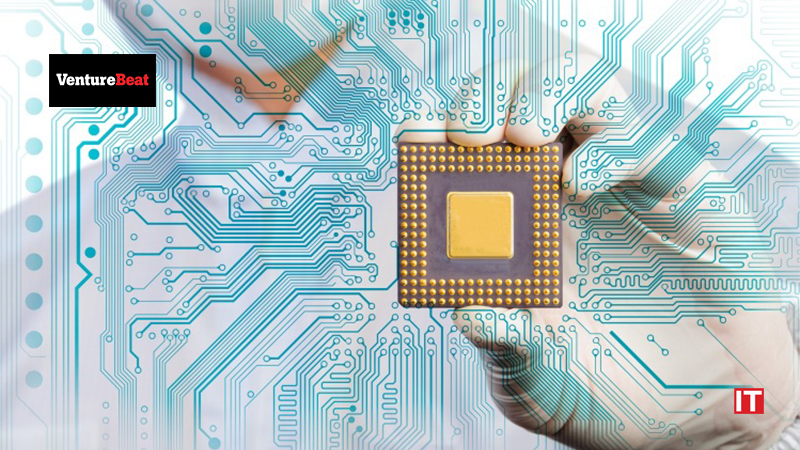

The road to more powerful AI-enabled data centers and supercomputers is going to be paved with more powerful silicon. And if Nvidia has its way, much of the silicon innovation will be technology it has developed.

At the Computex computer hardware show in Taipei today, Nvidia announced a series of hardware milestones and new innovations to advance the company’s aspirations. A key theme is raising performance to enable more data intelligence and artificial intelligence across use cases.

“AI is transforming every industry by infusing intelligence into every customer engagement,” Paresh Kharya, senior director of product management at Nvidia, said during a media briefing. “Data centers are transforming into AI factories.”

Grace superchip is building block of AI factory

One of the key technologies that will help enable Nvidia’s vision is the company’s Grace superchips. At Computex, Nvidia announced that multiple hardware vendors, including ASUS, Foxconn Industrial Internet, GIGABYTE, QCT, Supermicro and Wiwynn, will build Grace-based systems that will begin to ship in the first half of 2023. Nvidia first announced the Grace central processing unit (CPU) superchip in 2021 as an ARM-based architecture for AI and high-performance computing workloads.

Kharya said the Grace superchip will be available in a number of different configurations. One option is a two-chip architecture connected with Nvidia’s NVLink interconnect, which will enable up to 144 ARM v9 compute cores. The second approach is known as the Grace Hopper Superchip, which combines the Grace CPU with an Nvidia Hopper GPU.

“Grace Hopper is built to accelerate the largest AI, HPC, cloud and hyperscale workloads,” Kharya said.

New 2U reference architecture design

As part of its Computex announcements, Nvidia also detailed a 2U (two rack unit) server architecture designed to ease adoption in data centers.