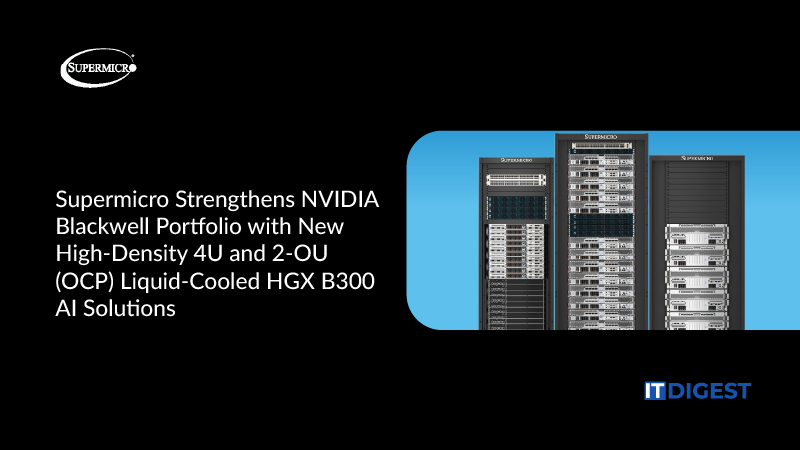

Super Micro Computer, Inc., a leading provider of AI, cloud, 5G/edge, and storage infrastructure, has broadened its NVIDIA Blackwell-based system lineup with the launch of two liquid-cooled NVIDIA HGX B300 platforms. Built to meet the high-density requirements of hyperscale environments and AI factory operations, these systems form part of Supermicro’s Data Center Building Block Solutions® (DCBBS) and target high GPU performance and energy efficiency.

“With AI infrastructure demand accelerating globally, our new liquid-cooled NVIDIA HGX B300 systems deliver the performance density and energy efficiency that hyperscalers and AI factories need today,” said Charles Liang, president and CEO of Supermicro. “We’re now offering the industry’s most compact NVIDIA HGX B300 solutions achieving up to 144 GPUs in a single rack—while reducing power consumption and cooling costs through our proven direct liquid-cooling technology. Through our DCBBS, this is how Supermicro enables our customers to deploy AI at scale: faster time-to-market, maximum performance per watt, and end-to-end integration from design to deployment.”

High-Density 2-OU (OCP) HGX B300 for Hyperscalers and Cloud Providers

The compact 2-OU (OCP) liquid-cooled NVIDIA HGX B300 solution adheres to the 21-inch Open Compute Project (OCP) Open Rack V3 (ORV3) standard. Designed for space-critical environments, the system supports up to 144 GPUs per rack, delivering high compute density without compromising rack serviceability. Each system houses eight NVIDIA Blackwell Ultra GPUs rated at up to 1,100W TDP each, and the design is intended to reduce rack footprint and power consumption.

The modular rack design features blind-mate manifolds and separate GPU/CPU trays with advanced liquid cooling, enabling seamless scalability with NVIDIA Quantum-X800 InfiniBand switching and Supermicro’s in-row coolant distribution units (CDUs). A fully built SuperCluster unit can reach 1,152 GPUs when combining compute, networking, and cooling racks.
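The density figures above imply a few numbers the article does not state directly. The short Python sketch below derives them from the quoted values only; it assumes every rack is fully populated and that all 1,152 GPUs sit in compute racks. The GPU power figure counts GPU TDP alone and excludes CPUs, NICs, and other components.

```python
# Figures quoted in the article for the 2-OU (OCP) platform.
GPUS_PER_SYSTEM = 8            # eight Blackwell Ultra GPUs per system
GPUS_PER_RACK = 144            # maximum GPUs per ORV3 rack
GPU_TDP_WATTS = 1_100          # up to 1,100 W TDP per GPU
GPUS_PER_SUPERCLUSTER = 1_152  # GPUs in a fully built SuperCluster unit

# Derived values (assumption: racks are fully populated and
# every GPU in the SuperCluster sits in a compute rack).
systems_per_rack = GPUS_PER_RACK // GPUS_PER_SYSTEM
compute_racks = GPUS_PER_SUPERCLUSTER // GPUS_PER_RACK
gpu_power_per_rack_kw = GPUS_PER_RACK * GPU_TDP_WATTS / 1_000

print(systems_per_rack)       # 18
print(compute_racks)          # 8
print(gpu_power_per_rack_kw)  # 158.4
```

On these assumptions, a full rack holds 18 systems, a SuperCluster unit contains eight compute racks, and the GPUs in one rack account for up to roughly 158 kW at peak TDP.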

4U Front I/O Liquid-Cooled HGX B300 for Traditional Racks

Complementing the 2-OU model, Supermicro’s 4U liquid-cooled HGX B300 system is tailored for standard 19-inch EIA racks, supporting up to 64 GPUs per rack. Leveraging Supermicro’s DLC-2 (Direct Liquid-Cooling) technology, the system captures up to 98% of generated heat, boosting power efficiency while minimizing noise and simplifying maintenance. Its design caters to dense AI training and inference clusters within traditional rack layouts.

Key Benefits & Scalability

Both platforms are equipped with 2.1TB of HBM3e GPU memory per system and double compute fabric throughput to 800Gb/s via integrated NVIDIA ConnectX-8 SuperNICs. When combined with NVIDIA’s high-speed InfiniBand or Ethernet ecosystem, these enhancements power complex AI workloads such as foundation model training, agentic AI applications, and large-scale multimodal inference.
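For a sense of scale, the per-system memory figure can be rolled up to rack level. The sketch below is illustrative only: it uses the maximum GPUs per rack quoted for each platform and assumes eight GPUs per system for both models (the article states this for the 2-OU system; the 4U figure is an assumption based on both being HGX B300 platforms). The function name `hbm3e_tb_per_rack` is hypothetical.

```python
HBM3E_TB_PER_SYSTEM = 2.1  # from the article
GPUS_PER_SYSTEM = 8        # assumption: eight GPUs per system on both platforms

# Maximum GPUs per rack quoted in the article for each platform.
MAX_GPUS_PER_RACK = {"2-OU (OCP)": 144, "4U": 64}


def hbm3e_tb_per_rack(gpus_per_rack: int) -> float:
    """Aggregate HBM3e capacity in TB for a fully populated rack."""
    systems = gpus_per_rack // GPUS_PER_SYSTEM
    return round(systems * HBM3E_TB_PER_SYSTEM, 1)


for platform, gpus in MAX_GPUS_PER_RACK.items():
    print(f"{platform}: {hbm3e_tb_per_rack(gpus)} TB")
# 2-OU (OCP): 37.8 TB
# 4U: 16.8 TB
```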

With DLC-2-based systems validated as plug-and-play L11 and L12 configurations prior to shipment, Supermicro accelerates deployment timelines for hyperscale, enterprise, and government customers while meeting critical goals related to serviceability, total cost of ownership (TCO), and efficiency.

The introduction of these new systems further strengthens Supermicro’s growing NVIDIA Blackwell portfolio, which includes the NVIDIA GB300 NVL72, NVIDIA HGX B200, and NVIDIA RTX PRO 6000 Blackwell Server Edition. All are NVIDIA-Certified and optimized for robust AI infrastructure spanning single-node installations to full-scale AI factories.